Imagine with me for a minute.

Imagine a world where analytics data always tells the truth. A world where insights are trustworthy and tracking technology always works like you expect it to.

Everything is in order, and your life is a dream.

But life isn’t a dream. Achieving high-quality data isn’t easy. Managing an analytics implementation is often chaotic. So we should be realistic, accept that data is never going to be perfect, take what we have, and move on, right?

Not right.

It’s true, we don’t live in a perfect world, and data will never be perfect. But there is a way to bring that dream of better data for better decisions down to earth for a more grounded analytics strategy.

What’s the vehicle for doing so? The analytics test plan.

What is an analytics test plan?

An analytics test plan is a documented plan for how to test your analytics implementation. It outlines which components you want to test (e.g., tags and variables) and under which scenarios you want to test them.

As dramatic as I made the analytics test plan sound, there are real, tangible benefits to implementing one and executing it again and again. Those benefits include:

- Better data quality for better insights in decision-making

- A more positive user experience

- Greater value from MarTech

So how can you create and execute an analytics test plan? Let’s dig into the technologies you need to be aware of.

What technologies should I test?

Your analytics stack isn’t built from a single technology.

Robust analytics and marketing stacks incorporate multiple technologies that collect and manipulate data before it ends up in your reports.

As such, you should include the following components of the analytics stack as part of your test plan:

1. Your data layer

2. Your tag management system

3. Your analytics solution

4. DOM elements

Why not just the analytics solution? As you well know, collecting data into your analytics platform is the last step in a multilayer process. Your data layer and your TMS play a critical role in collecting and distributing data to your various analytics and marketing platforms. And so, including the data layer and your tag management system as part of your analytics test plan is critical.

In addition to TMSs and data layers, I’ve also seen many companies test DOM elements as part of their governance processes. These companies have foundational DOM elements or other JavaScript objects on their sites that their TMS or data layer references. If an element fails, so does their analytics stack.

Consequently, they incorporate these critical DOM elements and JavaScript objects into their test plans. This approach allows them to test every layer of their analytics process, so the data entering at the bottom of the stack comes out the top ready to be used for decision-making.

Be more efficient

Incorporating all the above technologies not only gives you confidence in data accuracy but also makes diagnosing problems more efficient.

Say your top KPI stopped tracking right now. A little scary, right? You’re left wondering, “At what point did my analytics stack break down and stop collecting the data?”

Recognizing the different technologies in the stack can help you better deduce where the problem is occurring.

For example, if you first look at your data layer and find that the data point has been captured correctly there, you can narrow the problem to a later stage, most likely the TMS, where a rule may not be executing properly. But if the data layer doesn’t have the data point, the error lies earlier, in populating the data layer, and you might look to the DOM elements to see whether any of them have failed at that level.
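The layer-by-layer reasoning above can be written down as a simple decision procedure. The following is a minimal sketch, assuming you can already answer a yes/no question at each layer (for example, by inspecting the page in a browser); the layer names and the `diagnose` function are hypothetical illustrations, not part of any particular tool.

```python
from typing import Mapping, Optional

# Layers ordered from where data originates to where it is reported.
# These names are illustrative; adapt them to your own stack.
STACK_ORDER = ("dom", "data_layer", "tms_rule", "analytics_variable")


def diagnose(checks: Mapping[str, bool]) -> Optional[str]:
    """Return the first layer whose check failed, or None if all passed.

    `checks` maps each layer name to True (the data point is present and
    correct at that layer) or False. A layer you haven't checked is treated
    as failed. Because each layer feeds the next, the first failing layer
    is the most likely place to start debugging.
    """
    for layer in STACK_ORDER:
        if not checks.get(layer, False):
            return layer
    return None


print(diagnose({"dom": True, "data_layer": True,
                "tms_rule": False, "analytics_variable": False}))
# tms_rule
```

If the data point is correct in the data layer but missing from the analytics variable, the procedure points at the TMS rule first, mirroring the example in the text.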

How do we build an analytics test plan?

Now that I’ve made a case for considering the entire analytics stack when building your analytics test plan, I’m going to add a disclaimer. You can’t test every data layer value, every analytics variable, and every TMS rule every time you release new code (at least not manually). That would be an endeavor of Herculean proportions. So you’ll need to prioritize.

Where to start?

Prioritize

First and foremost, test the elements directly affected by the release. That’s the bare minimum. If you deployed a new variable, test that variable. A new tag? Test it. In some cases there isn’t much more you can do: your testing is probably still highly manual with only some automation, and your resources are limited.

But if possible, best practice is to include any other scenario the release may have inadvertently affected. Conflicting JavaScript happens. Rule failures happen. It would be a shame to have them go unnoticed.

Of course, this broadens the scope of your testing. As with most things in your business and personal life, you’ll want to follow the 80/20 rule. There’s likely a small subset of your data that provides 80% of the value for the reporting and operation of your business. Test that first.

Below are some questions you can ask yourself to identify that subset providing 80% of the value.

Where would data loss hurt most for us?

Which variables in your analytics platform most significantly impact your operational reporting and decision-making? Those are typically your KPIs and maybe a handful of variables that are the foundation of your reporting. Test these.

Where would data loss hurt most for our customers?

Which data points most directly affect key end user experiences? This question is especially pertinent if you’re using personalization tools like Adobe Target. Test these too.
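One way to apply the 80/20 rule is to give each candidate test an estimated value score based on the two questions above, then keep the highest-scoring tests until they account for roughly 80% of the total. This is a minimal sketch; the scores, the `PlannedTest` type, and the test names are hypothetical, and in practice the weighting is a judgment call for your team.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PlannedTest:
    name: str
    business_impact: int  # hypothetical 0-10 score: where would data loss hurt us?
    customer_impact: int  # hypothetical 0-10 score: where would it hurt customers?

    @property
    def value(self) -> int:
        return self.business_impact + self.customer_impact


def prioritize(tests: List[PlannedTest], share: float = 0.8) -> List[PlannedTest]:
    """Pick the highest-value tests until they cover `share` of the total value."""
    ranked = sorted(tests, key=lambda t: t.value, reverse=True)
    target = share * sum(t.value for t in ranked)
    selected: List[PlannedTest] = []
    covered = 0
    for test in ranked:
        if covered >= target:
            break
        selected.append(test)
        covered += test.value
    return selected


candidates = [
    PlannedTest("checkout revenue", 10, 8),
    PlannedTest("product views", 6, 4),
    PlannedTest("footer clicks", 1, 0),
    PlannedTest("personalized offers", 5, 9),
]
print([t.name for t in prioritize(candidates)])
# ['checkout revenue', 'personalized offers', 'product views']
```

Simple additive scores keep the sketch readable; a team might instead weight customer impact more heavily when personalization tools depend on the data.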

So now that we have a good understanding of what to test, let’s go through some example scenarios.

Example 1: Update to a CMS template

Let’s say that we’re updating a couple of content management system (CMS) templates on our site. In this situation, the bare minimum to include in our analytics test plan would be the page load and click actions tracked on the updated templates.

But, if possible, I would recommend testing all of the templates managed through your CMS. There are likely some shared resources being updated in those few templates, such as JavaScript or HTML files. Changes to these resources could potentially impact the rest of your analytics collection on other CMS templates.

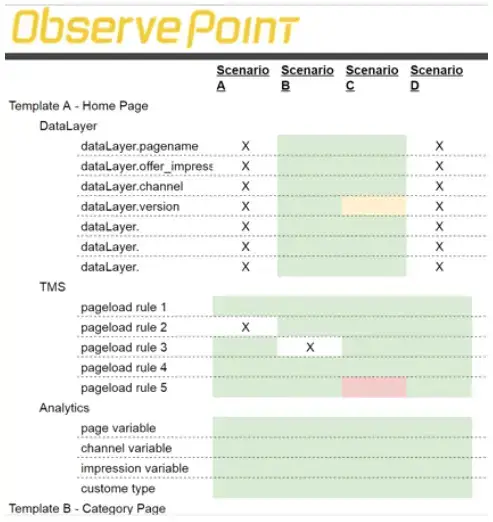

Here is an example of what an analytics test plan might look like for this example:

The columns list the different scenarios. In this case, each scenario could be an individual template or a group of templates. In the plan, we would also specify whether we’re testing page load or event-based tracking.

Note that the plan tests each piece of the analytics stack, including data layer, TMS, and analytics variables. With an outline like this, you could easily check off what’s working and what’s not as you execute the test plan.
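If you prefer to keep the plan alongside your code rather than in a spreadsheet, the same grid can be represented as a small data structure: scenarios on one axis, stack layers on the other, and a pass, fail, or untested status in each cell. This is a minimal sketch; the `TestPlan` class, the layer labels, and the scenario names are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# The layers of the stack checked for each scenario.
LAYERS = ("data layer", "TMS rule", "analytics variable")


class TestPlan:
    """A grid of scenarios x stack layers with one status per cell."""

    def __init__(self, scenarios: List[str]) -> None:
        # None = not yet tested; True/False = passed/failed.
        self.results: Dict[str, Dict[str, Optional[bool]]] = {
            scenario: {layer: None for layer in LAYERS} for scenario in scenarios
        }

    def record(self, scenario: str, layer: str, passed: bool) -> None:
        """Check off one cell; unknown scenarios or layers raise an error."""
        if scenario not in self.results or layer not in LAYERS:
            raise KeyError(f"unknown cell: {scenario!r} / {layer!r}")
        self.results[scenario][layer] = passed

    def _cells(self, status: Optional[bool]) -> List[Tuple[str, str]]:
        return [
            (scenario, layer)
            for scenario, row in self.results.items()
            for layer, value in row.items()
            if value is status
        ]

    def failures(self) -> List[Tuple[str, str]]:
        return self._cells(False)

    def untested(self) -> List[Tuple[str, str]]:
        return self._cells(None)


plan = TestPlan(["Article template: page load", "Article template: CTA click"])
plan.record("Article template: page load", "data layer", True)
plan.record("Article template: CTA click", "TMS rule", False)
print(plan.failures())
# [('Article template: CTA click', 'TMS rule')]
```

Tracking untested cells separately from failures makes it obvious when a test run was cut short rather than clean.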

Example 2: Update to a critical user path

In this example, let’s suppose you’re a travel site and you are updating the booking engine—the most important flow on your site.

In this case, the must-have would be to test all of the steps that changed. But because this is such a critical path (you’re a travel site and this is your primary booking engine), you’re better off testing the entire flow.

I was consulting at a travel agency several years ago, and after a particular release, we saw a 15% drop in revenue. In this instance, we did not execute a comprehensive test plan and it took us several weeks to find the problem.

We finally discovered that a specific cash-paying persona (one we had not tested for) was not being tracked properly, which caused the drop in reported revenue. We could have saved several weeks if we had planned our tests better.
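The lesson from that story is that a critical path should be tested across every meaningful variation of the user, not just the most common one. A simple way to avoid gaps is to enumerate the combinations explicitly. This sketch assumes hypothetical dimensions (traveler type, payment method, device); substitute the ones that matter on your own booking flow.

```python
from itertools import product
from typing import Dict, Iterable, List, Set, Tuple

# Hypothetical dimensions of the booking flow; replace with your own.
DIMENSIONS: Dict[str, List[str]] = {
    "traveler": ["guest", "logged_in"],
    "payment": ["credit_card", "cash", "gift_card"],
    "device": ["desktop", "mobile"],
}


def all_scenarios(dimensions: Dict[str, List[str]]) -> List[Tuple[str, ...]]:
    """Every combination of values, one per test scenario (2 x 3 x 2 = 12 here)."""
    return list(product(*dimensions.values()))


def coverage_gaps(tested: Iterable[Tuple[str, ...]],
                  dimensions: Dict[str, List[str]]) -> List[Tuple[str, ...]]:
    """Scenarios in the full matrix that nobody has tested yet."""
    done: Set[Tuple[str, ...]] = set(tested)
    return [s for s in all_scenarios(dimensions) if s not in done]


# Suppose every scenario except cash payments was tested:
tested = [s for s in all_scenarios(DIMENSIONS) if s[1] != "cash"]
for gap in coverage_gaps(tested, DIMENSIONS):
    print(gap)
```

Here the four untested combinations are exactly the cash-paying ones. Full combinations grow quickly as you add dimensions; pairwise testing is a common compromise when the matrix gets too large to run in full.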

When should I execute an analytics test plan?

Timing is everything. When you execute your analytics test plan is just as important as what you’re testing. Below are seven occasions where an analytics test plan can have the most impact for making sure you keep collecting actionable data for decision-making. They are listed in roughly the order of most important to least important, but individual needs may vary.

1. Deploying a TMS change

You should always do at least some testing before deploying a tag management system change. TMS change? Cue test plan.

If you decide not to execute an analytics test plan, you need to be either very confident or very comfortable with risk to deploy a TMS change without testing. The other exception is when an existing issue is already affecting customer experiences and you need to get a hotfix out as quickly as possible. In either case, you need to accept the risk that the change could make things worse.

As I’ve interacted with analytics professionals over the years, it seems like everybody who develops tag management system logic has a story about pushing a bug into production. In each case, it was a stressful and embarrassing situation. If you’re a tag management developer and you haven’t had that experience yet, executing a test plan before every change can hopefully keep you off that list.

Whether you’ve just:

- Updated the logic inside a specific rule

- Added a new rule

- Added logic controlling when a rule executes

- Added a brand-new vendor, or

- Moved a vendor

each of those changes can create conflicts with other tags. Be prepared to test.
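One practical way to catch those unintended conflicts is to capture the analytics requests a page sends before and after the TMS change and compare them. The sketch below assumes each captured hit has already been parsed into a dictionary of parameters keyed by tag name; how you capture them (browser developer tools, a proxy, or an automated crawler) is outside its scope, and the tag and parameter names are hypothetical.

```python
from typing import Dict, List

Hits = Dict[str, Dict[str, str]]  # tag name -> {parameter: value}


def diff_hits(before: Hits, after: Hits) -> List[str]:
    """Describe every tag or parameter that changed between two captures."""
    changes: List[str] = []
    for tag in sorted(before.keys() | after.keys()):
        if tag not in after:
            changes.append(f"{tag}: tag no longer fires")
            continue
        if tag not in before:
            changes.append(f"{tag}: new tag fires")
            continue
        old, new = before[tag], after[tag]
        for param in sorted(old.keys() | new.keys()):
            if old.get(param) != new.get(param):
                changes.append(f"{tag}.{param}: {old.get(param)!r} -> {new.get(param)!r}")
    return changes


before = {"analytics": {"pageName": "home", "events": "event1"}, "ads_pixel": {"id": "123"}}
after = {"analytics": {"pageName": "home", "events": ""}}
for change in diff_hits(before, after):
    print(change)
# ads_pixel: tag no longer fires
# analytics.events: 'event1' -> ''
```

The changes your release intended will show up too; the value is in spotting the ones you didn’t intend.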

2. Deploying an application update

You should also plan on testing with each application update. When your development team makes changes to your content management system, ecommerce platform, any critical user path, or your account management platform, this would be considered an application update.

Application updates likely occur on a regular release schedule. The changes may not directly impact analytics, but sometimes they do. Sometimes new features require additional analytics, or conflicts could arise.

In each of these situations, you could run into data collection issues. Executing a test plan, therefore, is critical.

3. Deploying new content

When you launch new pages through your content management system, the content team is often responsible for supplying key variable values: filling out all required fields and naming offers in a specific format. It’s critical to validate that those fields are populated correctly before you publish the new content.
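A field check like this is easy to script once the naming convention is written down. In this minimal sketch, the required field names and the offer-name format (`<channel>_<yyyymmdd>_<offer-slug>`) are hypothetical examples of a convention, not a standard; replace them with your team’s own rules.

```python
import re
from typing import Dict, List

# Hypothetical convention: every page needs these fields...
REQUIRED_FIELDS = ("page_name", "site_section", "offer_name")
# ...and offer names look like "email_20240115_spring-sale".
# (Eight digits only; this does not check that the date is real.)
OFFER_PATTERN = re.compile(r"^[a-z]+_\d{8}_[a-z0-9-]+$")


def validate_content(fields: Dict[str, str]) -> List[str]:
    """Return a list of problems with a page's analytics fields (empty if valid)."""
    problems = [
        f"missing {name}" for name in REQUIRED_FIELDS
        if not fields.get(name, "").strip()
    ]
    offer = fields.get("offer_name", "")
    if offer and not OFFER_PATTERN.match(offer):
        problems.append(f"offer_name {offer!r} does not match the naming convention")
    return problems


print(validate_content({"page_name": "spring sale", "site_section": "",
                        "offer_name": "Spring Sale"}))
```

Running a check like this before publishing catches both empty fields and offers named outside the agreed format.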

Special note about microsites and landing pages: these are especially vulnerable to analytics errors because they often don’t follow the same standards as your other templates. Straying from those standards becomes even more likely when a third-party agency launches the content. Building and executing an analytics test plan for these custom templates can catch analytics errors before they reach your live site.

4. Sending an email campaign

The above test cases have mostly focused on IT and development teams. For the next two test cases, we’re going to switch to marketing-driven processes, whose goal is to provide meaningful data for marketers to act on.

Let’s consider the importance of testing before email campaigns.

When you send an email campaign, you risk having the analytics tags on your landing pages break down, making it impossible to collect data on the email’s performance. To mitigate this risk, validate that each URL in the email points to a working redirect page that captures the analytics data you require. Then, when the final target page loads, confirm that the right tags on that page are working properly.

With so many moving parts that could cause data issues in your email campaign, make sure to test before you send.
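Part of this pre-send check can be automated by pulling every link out of the email’s HTML and confirming that each one carries the tracking parameters your analytics setup expects. This minimal sketch uses only Python’s standard library. The required parameter names (`utm_source`, `utm_medium`, `utm_campaign`) are an assumption based on a common convention, so swap in whatever your redirect pages actually read. It does not follow redirects or load the target page, which you would still need to verify separately.

```python
from html.parser import HTMLParser
from typing import Dict, List
from urllib.parse import parse_qs, urlparse

# Assumed tracking parameters; replace with the ones your setup requires.
REQUIRED_PARAMS = ("utm_source", "utm_medium", "utm_campaign")


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag in an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def missing_tracking(email_html: str) -> Dict[str, List[str]]:
    """Map each http(s) link to the required parameters it lacks."""
    collector = LinkCollector()
    collector.feed(email_html)
    report: Dict[str, List[str]] = {}
    for link in collector.links:
        parsed = urlparse(link)
        if parsed.scheme not in ("http", "https"):
            continue  # skip mailto:, tel:, and similar links
        params = parse_qs(parsed.query)  # parameters with blank values are dropped
        missing = [p for p in REQUIRED_PARAMS if p not in params]
        if missing:
            report[link] = missing
    return report


email = ('<a href="https://example.com/sale?utm_source=email&amp;utm_medium=newsletter'
         '&amp;utm_campaign=spring">Shop</a>'
         '<a href="https://example.com/about?utm_source=email">About</a>')
print(missing_tracking(email))
# {'https://example.com/about?utm_source=email': ['utm_medium', 'utm_campaign']}
```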

5. Launching a paid marketing campaign

A test case similar to the email campaign is the paid marketing campaign. Whether through paid search, video, display, or any other paid channel, you’re paying to bring people to your site. In every case, it’s critical that your landing page analytics function properly so you can measure the return on ad spend for those initiatives.

Similar to the email test case, with paid marketing you want to check that the correct tags (particularly analytics tags), data layer, tag management system, and DOM elements are present on the target landing pages as prospects click through from the ad.
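A first-pass presence check on a landing page can be scripted against its HTML. This minimal sketch parses the static HTML only, so anything injected later by JavaScript will not appear; a browser-based tool is needed for that. The example uses Google Tag Manager’s loader domain and its default `dataLayer` name purely for illustration, and the `lead-form` element id and `GTM-XXXX` container id are hypothetical.

```python
from html.parser import HTMLParser
from typing import Iterable, List, Set


class PageInventory(HTMLParser):
    """Records script sources, inline script text, and element ids in static HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.script_srcs: List[str] = []
        self.inline_js: List[str] = []
        self.ids: Set[str] = set()
        self._in_script = False

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if attr_map.get("id"):
            self.ids.add(attr_map["id"])
        if tag == "script":
            self._in_script = True
            if attr_map.get("src"):
                self.script_srcs.append(attr_map["src"])

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_script = False

    def handle_data(self, data):
        if self._in_script:
            self.inline_js.append(data)


def check_landing_page(html: str, tms_host: str, data_layer_name: str,
                       required_ids: Iterable[str]) -> List[str]:
    """List the stack components missing from a landing page's static HTML."""
    page = PageInventory()
    page.feed(html)
    page.close()
    problems: List[str] = []
    if not any(tms_host in src for src in page.script_srcs):
        problems.append(f"no script loaded from {tms_host}")
    if not any(data_layer_name in js for js in page.inline_js):
        problems.append(f"data layer {data_layer_name!r} not declared inline")
    for element_id in required_ids:
        if element_id not in page.ids:
            problems.append(f"missing element #{element_id}")
    return problems


landing = ('<html><head><script>window.dataLayer = window.dataLayer || [];</script>'
           '<script src="https://www.googletagmanager.com/gtm.js?id=GTM-XXXX"></script>'
           '</head><body><form id="lead-form"></form></body></html>')
print(check_landing_page(landing, "googletagmanager.com", "dataLayer", ["lead-form"]))
# []
```

Note that a data layer declared in an external script file would be reported as missing here; adjust the check to match how your site declares it.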

6. Updating your data layer

Okay, let’s return to an IT focus by talking about updates to the data layer.

Data layer updates often happen in parallel with application updates. But at other times, you might update the data layer or its logic independently of an application change.

Tag management rules depend on correctly identifying specific values in the data layer. Even a change as small as capitalizing one letter of a data layer value can stop a rule from firing and significantly disrupt data collection in your analytics platform.

Test your data layer.
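To see why a single capital letter matters, consider a TMS rule that fires only when a data layer value exactly equals `checkout`. Many TMS conditions compare strings exactly unless configured otherwise, so a release that starts sending `Checkout` silently stops the rule. The sketch below models such a rule and a simple check of a data layer snapshot against the values your rules expect; the keys, values, and function names are hypothetical.

```python
from typing import Dict, List, Set


def rule_fires(data_layer: Dict[str, str]) -> bool:
    """A hypothetical TMS rule: fire the checkout tag on the checkout page."""
    return data_layer.get("pageType") == "checkout"  # exact, case-sensitive match


# Expected values for each data layer key your rules depend on (hypothetical).
EXPECTED: Dict[str, Set[str]] = {
    "pageType": {"home", "product", "cart", "checkout", "confirmation"},
    "currency": {"USD", "EUR", "JPY"},
}


def validate_data_layer(data_layer: Dict[str, str]) -> List[str]:
    """Flag missing keys and unexpected values, noting likely case mismatches."""
    problems: List[str] = []
    for key, allowed in EXPECTED.items():
        value = data_layer.get(key)
        if value is None:
            problems.append(f"{key} is missing")
        elif value not in allowed:
            lowered = {a.lower() for a in allowed}
            hint = " (case mismatch?)" if value.lower() in lowered else ""
            problems.append(f"{key}={value!r} is not an expected value{hint}")
    return problems


snapshot = {"pageType": "Checkout", "currency": "USD"}
print(rule_fires(snapshot))           # False
print(validate_data_layer(snapshot))  # ["pageType='Checkout' is not an expected value (case mismatch?)"]
```

Validating the data layer directly surfaces the capitalization change before anyone notices the missing data in reports.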

7. Launching an A/B test

The last test case is for when you’re launching some form of marketing test such as an A/B test, a targeting test, or a segment-based test.

Companies often integrate their marketing tests with their analytics platform so that, in addition to comparing KPIs across test variants, they can analyze the long-term impact of each test.

Each time you launch a test, there’s a risk that data collection won’t happen correctly in your analytics platform. To mitigate that risk, you’ll want to test your analytics alongside your optimization platform to verify that your tags don’t break down.
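Before trusting long-term analysis of a test, it helps to confirm that every analytics hit from the test pages records which variant the visitor saw, and that each variant actually shows up. This minimal sketch assumes the hits have already been captured and parsed into dictionaries, and that the variant is sent in a parameter hypothetically named `experiment_variant`; real optimization and analytics integrations use their own parameter names and mechanisms.

```python
from typing import Dict, Iterable, List, Set

VARIANT_PARAM = "experiment_variant"  # hypothetical parameter name


def check_experiment_hits(hits: Iterable[Dict[str, str]],
                          variants: List[str]) -> List[str]:
    """Verify captured analytics hits carry a known variant id for the test."""
    problems: List[str] = []
    seen: Set[str] = set()
    for i, hit in enumerate(hits):
        variant = hit.get(VARIANT_PARAM)
        if variant is None:
            problems.append(f"hit {i}: no {VARIANT_PARAM}")
        elif variant not in variants:
            problems.append(f"hit {i}: unknown variant {variant!r}")
        else:
            seen.add(variant)
    # Every variant should be recorded at least once during the check.
    for variant in variants:
        if variant not in seen:
            problems.append(f"variant {variant!r} never recorded")
    return problems


captured = [{"experiment_variant": "A"}, {"experiment_variant": "B"}, {"page": "home"}]
print(check_experiment_hits(captured, ["A", "B"]))
# ['hit 2: no experiment_variant']
```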

Get the conversation started

In many companies I’ve worked with, the conversation about analytics testing had never happened before I arrived. But in order for the above test cases to occur, your organization needs to be on the same page. The conversation needs to happen.

Wherever your analytics testing center lives within the organization, conducting all these tests is likely going to require some coordination across departments. As such, you need to be able to communicate not just the what of the analytics test plan, but more importantly the why, so that everyone can be on board. You can use some of the reasons I laid out earlier, namely:

- Better data quality for better insights in decision-making

- A more positive user experience (think of the A/B testing example)

- Greater value from MarTech (think of the email and paid advertising examples)

- Increased efficiency due to proactive testing

Once your devil’s advocates understand the why, you can dig into what the analytics test plan is, and show how to execute the analytics test plan in each of the above situations.

How can I automate the process?

We’ve talked about how to structure an analytics test plan and when to conduct one. Now how do you carry it out?

Option one is to hire a bunch of folks to execute these test plans in each of these scenarios. That would be a resource ask of titanic proportions, one your upper management might not deem worth pursuing. You would meet opposition or, at the very least, face a lengthy business process to prove the value of investing in those resources.

Option two is to minimize expensive human effort and automate these test cases. With automation, you can execute your test plans with a fraction of the resources that running them manually would require.

ObservePoint automates your analytics test plans via WebAssurance, its flagship tag governance solution. WebAssurance can help you dramatically increase efficiency in executing your analytics test plans. In one recent case, Japanese company Recruit used ObservePoint to reduce their analytics testing team from four full-time employees to less than one full-time employee, resulting in significant cost savings and more accurate data.

To learn more about how ObservePoint can automate the process of validating your analytics tags, schedule a demo today. Good luck testing!